Data Machina #242

AI and Causality. Causal Parrots. Causal Deep Learning. Causal Agents. GenAI Guidebook. Open-source AI Cookbook. Compound AI Systems. Let's Build the GPT Tokeniser. Gemma SOTA in PyTorch.

AI and Causality. The introduction of OpenAI Sora (which aims to simulate real worlds from video understanding) has sparked a bit of a debate among some prominent AI researchers. First: what do AI researchers mean by “causal”?

Secondly: Do LLMs have causal reasoning capabilities? Can LLMs learn causality from just real world training data? Can LLMs learn, represent, and understand world models and physics?

Judea Pearl, one of the world’s top researchers in Probabilistic AI, Bayesian Networks, and Causal Inference, once famously said in an interview:

Deep Learning, albeit complex and non-trivial, is a curve-fitting exercise. To build truly intelligent machines, teach them cause and effect.

That was back in 2018, when Judea published The Book of Why - The New Science of Cause and Effect (here's a summary). Since then, and since the Attention Is All You Need paper, most AI research has focused on scaling Transformers and LLMs.

So: Do LLMs really have causal reasoning capabilities today? How limited or advanced are they in terms of causal reasoning? Let’s see...

Yes. GPT-4 SOTA on causal tasks. Recently, the AI Intelligentsia has been pushing the Transformers and LLM agenda on Causality. A paper published by MS Research, claiming that GPT-4 achieved a new SOTA on a wide range of causal tasks, sparked the debate. Paper: Causal Reasoning and LLMs: Opening a New Frontier for Causality.

No. LLMs are not causal. In parallel, some prominent AI causality researchers make it clear that what may look like causal reasoning may simply be retrieval of causal facts memorised from the training data. In a recent paper, researchers say that LLMs cannot be causal. Paper: Causal Parrots: Large Language Models May Talk Causality But Are Not Causal.

That group of researchers just launched a new conference, Are LLMs Simply Causal Parrots? (check out the papers), in which different points of view will be discussed.

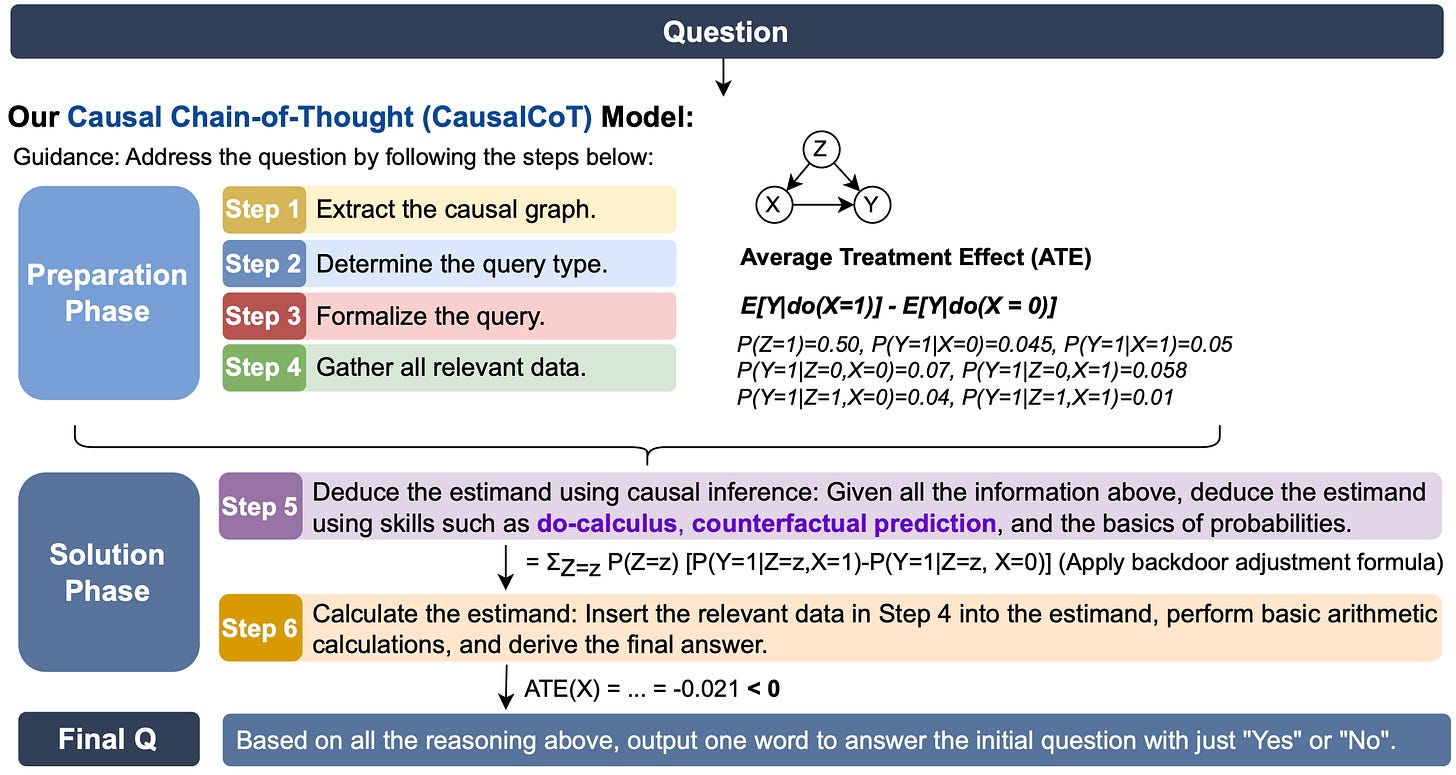

No. GPT-4 and LLMs struggle with causal & counterfactual reasoning. In this new paper, a group of researchers, using Judea Pearl’s Ladder of Causation as a benchmark, developed a Causal Chain of Thought Model and show that LLMs may still be far from reliable causal reasoning. Check out the paper, code, and dataset: CLadder: Assessing Causal Reasoning in Language Models.

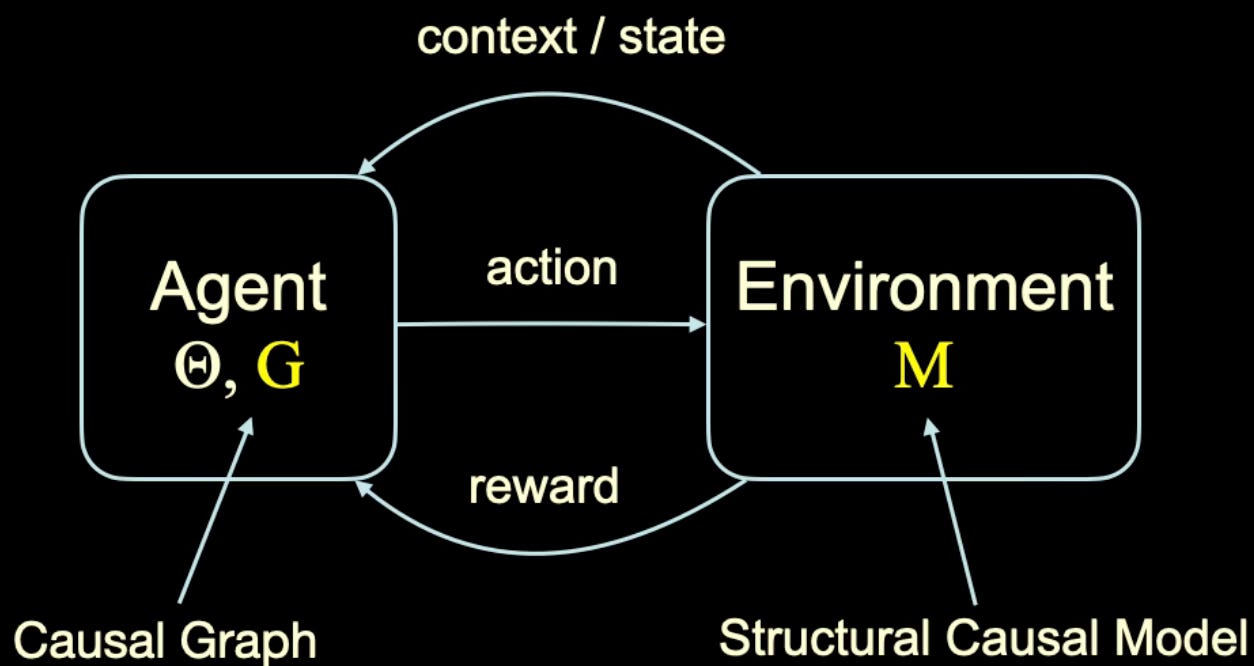
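For readers new to the Ladder of Causation, here is a minimal sketch of its three rungs (association, intervention, counterfactual) on a toy structural causal model. Everything in it is an illustrative assumption: the graph (Z -> X, Z -> Y, X -> Y), the probabilities, and the function names `sample_unit` and `ladder` are made up for this example and are not taken from the CLadder paper or its dataset.

```python
import random


def sample_unit(rng, do_x=None):
    """Draw one unit from a toy SCM with confounder Z: Z -> X, Z -> Y, X -> Y."""
    z = rng.random() < 0.5
    u_x, u_y = rng.random(), rng.random()  # exogenous noise terms
    # Without an intervention, X listens to Z; do(X=x) cuts that arrow.
    x = (u_x < (0.8 if z else 0.2)) if do_x is None else bool(do_x)
    y = u_y < 0.2 + 0.3 * x + 0.4 * z
    return z, x, y, u_y


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def ladder(n=100_000, seed=0):
    rng = random.Random(seed)
    observed = [sample_unit(rng) for _ in range(n)]

    # Rung 1, association: P(Y=1 | X=1) - P(Y=1 | X=0) in observational data.
    assoc = (mean(y for _, x, y, _ in observed if x)
             - mean(y for _, x, y, _ in observed if not x))

    # Rung 2, intervention: P(Y=1 | do(X=1)) - P(Y=1 | do(X=0)).
    interv = (mean(sample_unit(rng, do_x=1)[2] for _ in range(n))
              - mean(sample_unit(rng, do_x=0)[2] for _ in range(n)))

    # Rung 3, counterfactual: among units observed with X=0 and Y=0, how many
    # would have had Y=1 had X been 1, keeping their own Z and noise u_y fixed?
    failures = [(z, u_y) for z, x, y, u_y in observed if not x and not y]
    counterfactual = mean(u_y < 0.2 + 0.3 + 0.4 * z for z, u_y in failures)

    return assoc, interv, counterfactual


if __name__ == "__main__":
    assoc, interv, cf = ladder()
    print(f"association:    {assoc:.3f}")
    print(f"intervention:   {interv:.3f}")
    print(f"counterfactual: {cf:.3f}")
```

In this toy model the observational gap is analytically 0.54, while the interventional effect is only 0.30, because Z drives both X and Y. The counterfactual query (about 0.42 analytically) cannot be answered from either number alone; it needs the structural equations and the unit's own noise.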

Hold on, DeepMind researchers say: Agents can learn causal world models. A team of DeepMind researchers just published a new paper in which they explain that any agent capable of satisfying a regret bound under a large set of distributional shifts must have learned an approximate causal model of the data. Paper: Robust Agents Learn Causal World Models.

Causal Reinforcement Learning: The Future of AI? Until recently, research on Reinforcement Learning and research on Causality evolved independently, with no connection between the two. Counterfactual relations are what can unite them. A group of researchers at the Causal AI Lab, CU, led by Elias, say that Causal RL = Causal Inference + Reinforcement Learning is the way forward. Check out this ICML tutorial: Causal Reinforcement Learning (slides and videos).

If you’re interested in Causal AI, here are a few interesting new resources:

Free video-lectures on Causality for AI & ML. A great collection of 14 video recordings on Causality and AI at TU Darmstadt. (Click on Matej’s name in the video below to get all the video lectures.)

A new free book on Causal ML. This free online book introduces a modern approach to Causal AI, covering directed acyclic graphs (DAGs) and structural causal models (SCMs), and presents Double/Debiased ML. It comes packed with lab exercises, notebooks and libraries. Get it here: Causal ML Book - Applied Causal Inference Powered by ML and AI.
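To give a flavour of Double/Debiased ML, here is a minimal, stdlib-only sketch of the cross-fitted partialling-out estimator for a partially linear model Y = theta*D + g(X) + noise, with D = m(X) + noise. It is not code from the book. The data-generating process, the binned-mean nuisance learner `fit_bin_means`, and all function names are assumptions chosen to keep the example short; in practice the nuisance functions would be fitted with proper ML models.

```python
import math
import random


def simulate(n, theta, rng):
    """Toy data: X confounds both the treatment D and the outcome Y."""
    rows = []
    for _ in range(n):
        x = rng.random()
        d = math.sin(2 * math.pi * x) + rng.gauss(0, 1)
        y = theta * d + 2 * math.sin(2 * math.pi * x) + rng.gauss(0, 1)
        rows.append((x, d, y))
    return rows


def fit_bin_means(xs, targets, bins=20):
    """Toy nuisance learner: piecewise-constant regression on x in [0, 1)."""
    sums, counts = [0.0] * bins, [0] * bins
    for x, t in zip(xs, targets):
        b = min(int(x * bins), bins - 1)
        sums[b] += t
        counts[b] += 1
    overall = sum(targets) / len(targets)  # fallback for empty bins
    means = [s / c if c else overall for s, c in zip(sums, counts)]
    return lambda x: means[min(int(x * bins), bins - 1)]


def naive_slope(rows):
    """OLS slope of Y on D alone, ignoring the confounder X."""
    d_bar = sum(d for _, d, _ in rows) / len(rows)
    y_bar = sum(y for _, _, y in rows) / len(rows)
    num = sum((d - d_bar) * (y - y_bar) for _, d, y in rows)
    den = sum((d - d_bar) ** 2 for _, d, _ in rows)
    return num / den


def dml_theta(rows, folds=2, bins=20):
    """Cross-fitted partialling-out estimate of theta."""
    num = den = 0.0
    for k in range(folds):
        # Fit nuisance functions on the other folds, residualise on fold k.
        train = [r for i, r in enumerate(rows) if i % folds != k]
        test = [r for i, r in enumerate(rows) if i % folds == k]
        xs = [x for x, _, _ in train]
        m_hat = fit_bin_means(xs, [d for _, d, _ in train], bins)  # E[D | X]
        l_hat = fit_bin_means(xs, [y for _, _, y in train], bins)  # E[Y | X]
        for x, d, y in test:
            d_res, y_res = d - m_hat(x), y - l_hat(x)
            num += d_res * y_res
            den += d_res * d_res
    return num / den


if __name__ == "__main__":
    rows = simulate(4000, theta=1.5, rng=random.Random(0))
    print(f"naive slope: {naive_slope(rows):.3f}")
    print(f"DML theta:   {dml_theta(rows):.3f}")
```

With the true theta set to 1.5, the naive slope is pulled upwards by the confounder, while the residual-on-residual estimate should land close to 1.5. The cross-fitting step (fitting nuisances on one fold, residualising on another) is what keeps overfitting in the nuisance models from leaking into the estimate.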

Causal AI and Causal Inference with Python. A new, fresh series of interviews and podcasts by Alex on: causality, causal AI, machine learning, optimisation, decision-making and Python.

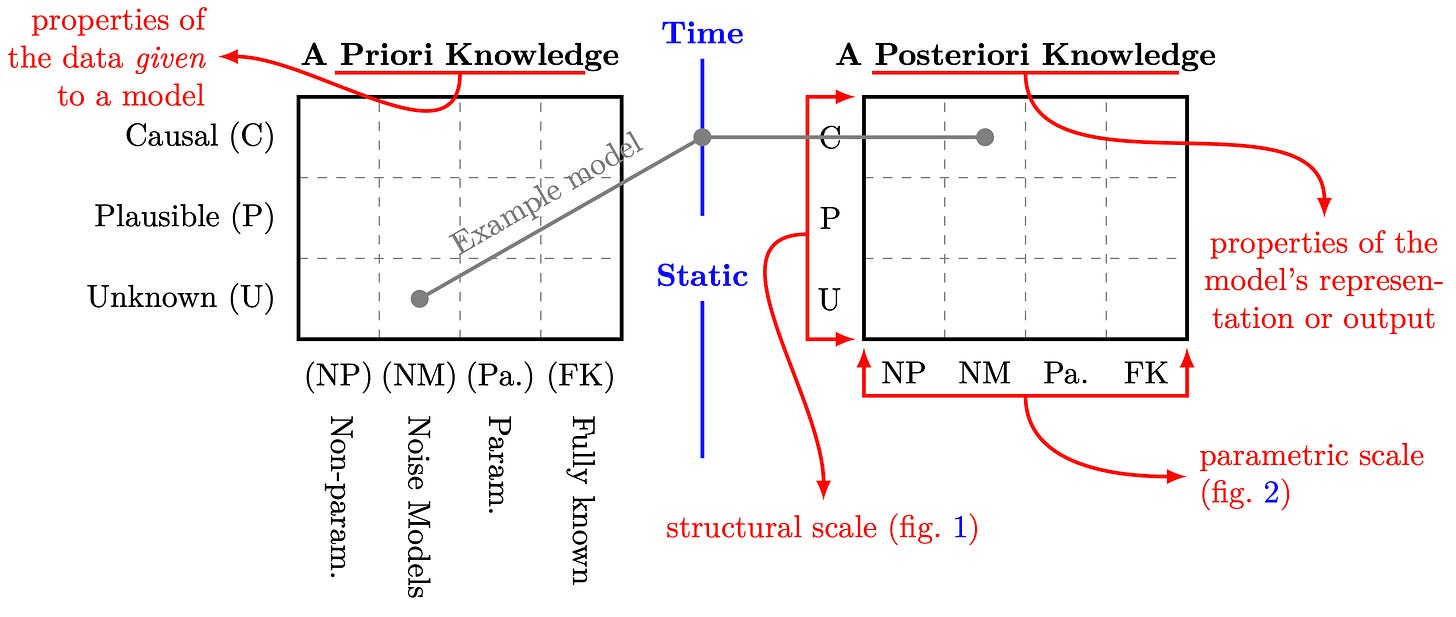

New paper on Causal Deep Learning, Feb 2024. Researchers at Cambridge Uni just revised their proposal for a causal deep learning framework that spans three dimensions: (1) a structural dimension, which incorporates partial yet testable causal knowledge, (2) a parametric dimension, which captures the type of relationships among the variables of interest, and (3) a temporal dimension, which captures exposure times or how the variables of interest interact (possibly causally) over time. Paper: Causal Deep Learning.

Have a nice week.

10 Link-o-Troned

[new] OpenCodeInterpreter: Code Generation on Par with GPT-4

[new] LORALand - 25 Fine-tuned Mistral-7b Models that Beat GPT-4

the ML Pythonista

The New Google Gemma SOTA Open Models Implemented in PyTorch

Karpathy: Let's Build the GPT Tokeniser - Tokenisation :( the Root of All LLM Evils

Google Magika - Open, Fast DL Model for 99% Accurate File Type Detection

Deep & Other Learning Bits

AI/ DL ResearchDocs

PALO: Large Multimodal Model for Visual Reasoning in 10 Languages

Time Series Forecasting with LLMs: Advantages and Limitations

MLOps Untangled

data v-i-s-i-o-n-s

AI startups -> radar

ML Datasets & Stuff

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.