Data Machina #261

Generative AI + Time-series Forecasting? The AI Agent Engineer. An Agentic Architecture? What's Arena Learning? AlphaFold3 Visualised. GraphRAG + Neo4j. Internet of Agents. Memory3 for LLMs.

Generative AI + Time-Series Forecasting? Many world-class organisations are starting to invest in new GenAI+TS forecasting methods that involve, for example, developing new specialised VAEs, using Vision-Language Models, pre-training the model on trillions of TS data points, or incorporating text embedding and tokenisation into the TS forecasting method. Check out these 6 very recent, interesting papers that show the impressive, rapid evolution in this area.

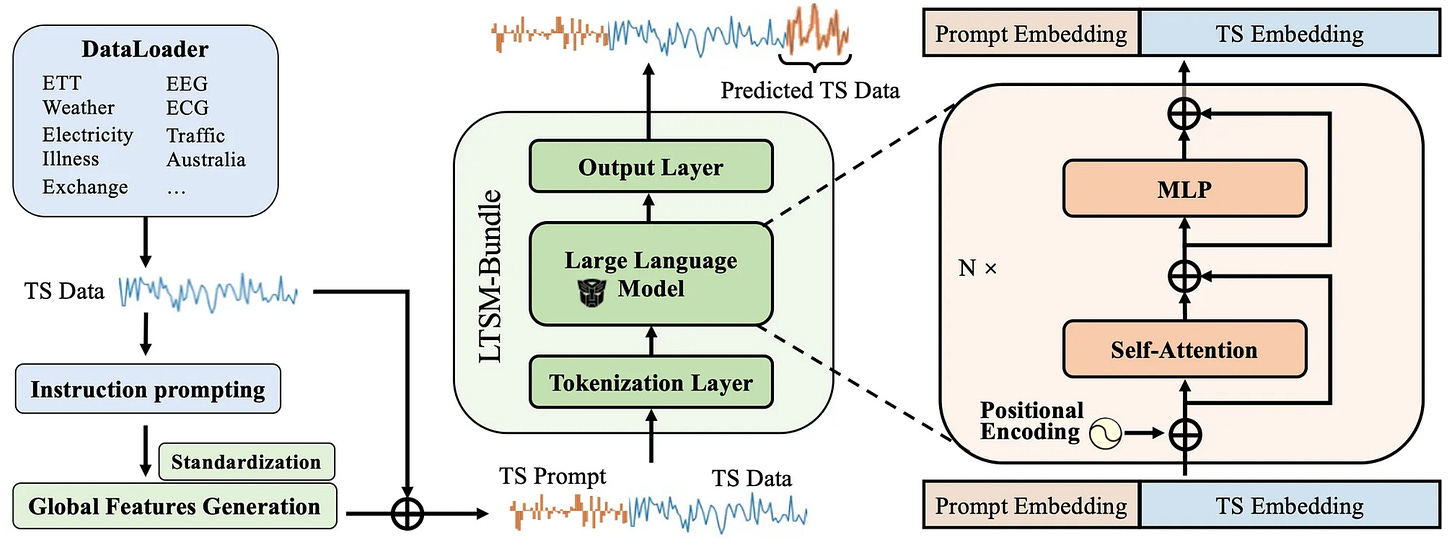

Re-programming LLMs for time-series modelling. This is a great post about how researchers are trying to bridge the information gap between time series and natural language from every perspective of training an LLM. Re-programming an LLM for time-series modelling is similar to fine-tuning it for a specific domain. It involves several key steps: tokenisation, base model selection, prompt engineering, and defining the training paradigm. Blogpost: Time Series Are Not That Different for LLMs.

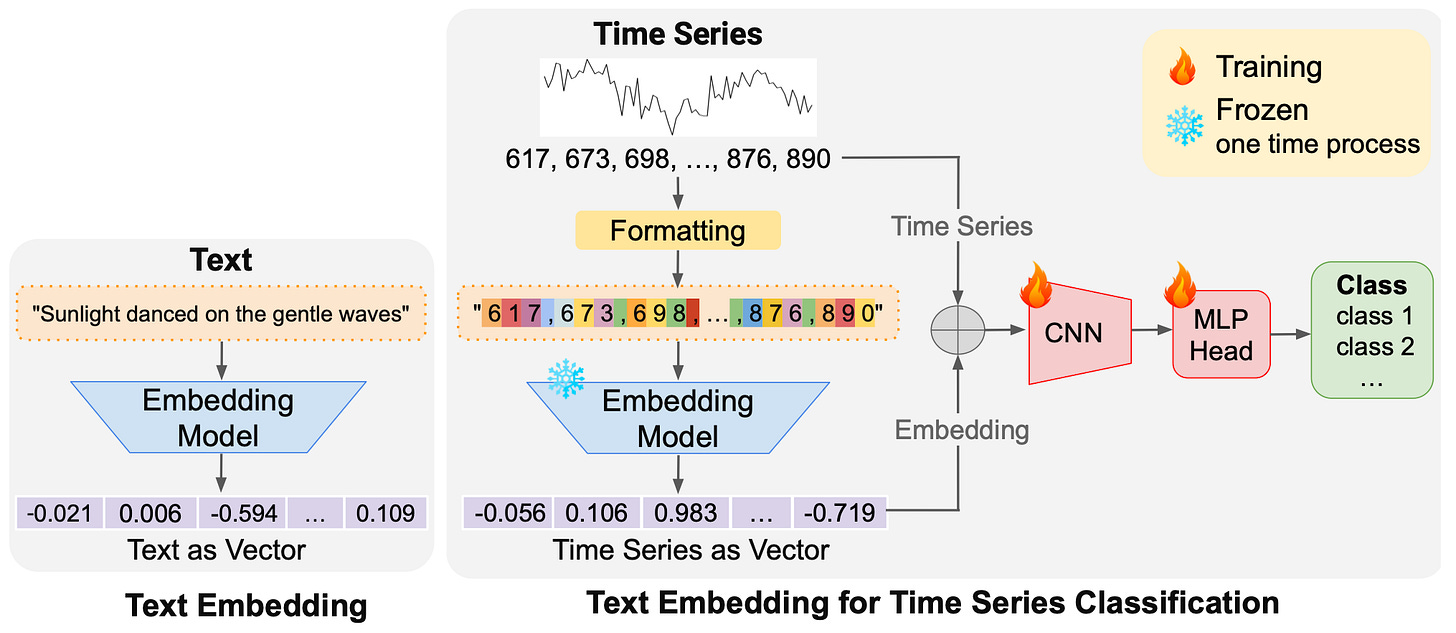
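
To make the tokenisation step a bit more concrete, here is a minimal sketch of one way to serialise a numeric series into text that an LLM tokeniser can split digit by digit. This is an illustration only and not necessarily the scheme discussed in the blogpost: the function names, the fixed precision, and the separators are all assumptions.

```python
def serialise_series(values, decimals=2):
    """Turn a numeric series into a digit-level string (hypothetical helper)."""
    chunks = []
    for v in values:
        # Fix the precision and drop the decimal point so every step has a stable shape.
        scaled = round(abs(v) * 10 ** decimals)
        # Space-separate the digits so a tokeniser is nudged towards one token per digit.
        digits = " ".join(str(scaled))
        chunks.append(("- " if v < 0 else "") + digits)
    # Commas mark the boundary between time steps.
    return " , ".join(chunks)


def deserialise_series(text, decimals=2):
    """Inverse of serialise_series: parse the string back into floats."""
    values = []
    for chunk in text.split(","):
        chunk = chunk.strip()
        sign = -1.0 if chunk.startswith("-") else 1.0
        digits = chunk.lstrip("- ").replace(" ", "")
        values.append(sign * int(digits) / 10 ** decimals)
    return values


encoded = serialise_series([1.5, -0.25, 12.0])
decoded = deserialise_series(encoded)
```

The fixed precision is the main design choice here: it bounds the number of tokens per time step at the cost of rounding the values.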

JPMorgan - Lightweight SOTA TS classification with an embedding model. This paper introduces LETS-C, a new method that addresses the issue of fine-tuning with millions of trainable parameters. The method uses a language embedding model to embed time series and then pairs the embeddings with a simple classification head composed of CNNs and an MLP. LETS-C not only outperforms the current SOTA in TS classification accuracy but also offers a lightweight solution, using only 14.5% of the trainable parameters on average compared to the SOTA model. Paper: LETS-C: Leveraging Language Embedding for Time Series Classification.

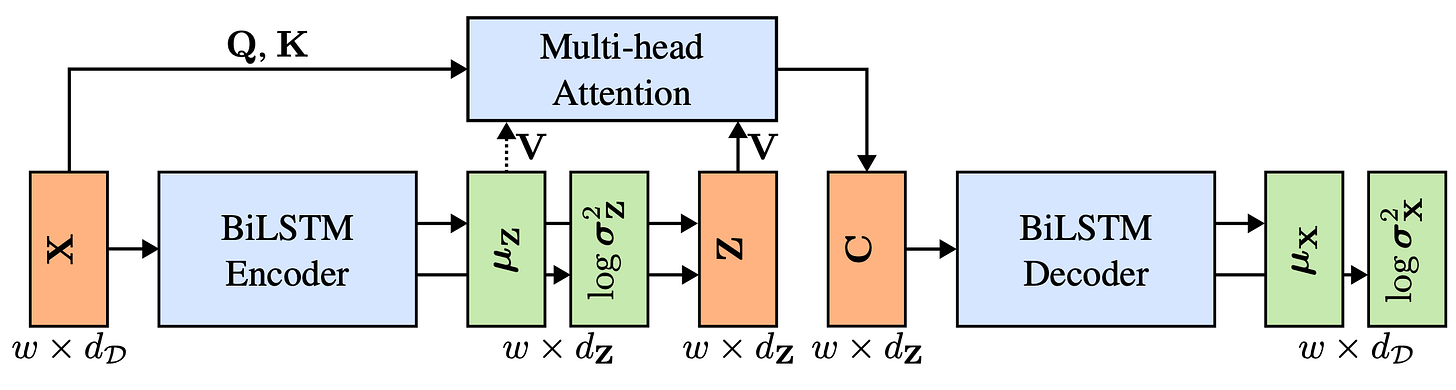

Mercedes-Benz - Automatic TS anomaly detection with a new TeVAE. This paper introduces a temporal variational autoencoder (TeVAE) that can detect anomalies with minimal false positives when trained on unlabelled data. The approach also avoids the bypass phenomenon and introduces a new method to remap individual windows to a continuous time series. Using real-world industrial data, TeVAE detects 65% of the anomalies present, and only 6% of its detections are wrong. Paper: TeVAE: A Variational Autoencoder Approach for Discrete Online Anomaly Detection in Variable-state Multivariate Time-series Data.
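
For readers unfamiliar with the window-remapping problem: a model that works on sliding windows produces several overlapping outputs per timestamp, and these have to be merged back into one continuous series. The sketch below shows the plain-averaging baseline only. It is not the remapping method proposed in the paper, and the helper names and parameters are assumptions for illustration.

```python
def sliding_windows(series, size, stride=1):
    """Cut a series into overlapping windows of a fixed size."""
    return [series[i:i + size] for i in range(0, len(series) - size + 1, stride)]


def remap_windows(windows, stride, length):
    """Merge per-window outputs into one series by averaging overlaps.

    Baseline only (assumption): every window contributes equally to each
    timestamp it covers.
    """
    totals = [0.0] * length
    counts = [0] * length
    for w_idx, window in enumerate(windows):
        start = w_idx * stride
        for offset, value in enumerate(window):
            totals[start + offset] += value
            counts[start + offset] += 1
    # Timestamps that no window covers stay None rather than a made-up value.
    return [t / c if c else None for t, c in zip(totals, counts)]


series = [1.0, 2.0, 3.0, 4.0, 5.0]
windows = sliding_windows(series, size=3, stride=1)
merged = remap_windows(windows, stride=1, length=len(series))
```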

Datadog - SOTA foundation model with Mixture-of-Students for observability TS data. This paper introduces Toto, the first general-purpose TS forecasting foundation model tuned specifically for observability TS data. The model was trained on a dataset of one trillion time series data points, the largest among currently published TS foundation models. Toto outperforms existing TS foundation models on observability data, and also excels at general-purpose forecasting tasks in domains such as electricity and weather, achieving SOTA zero-shot performance on multiple open benchmark datasets. Paper: Toto: Time Series Optimized Transformer for Observability.

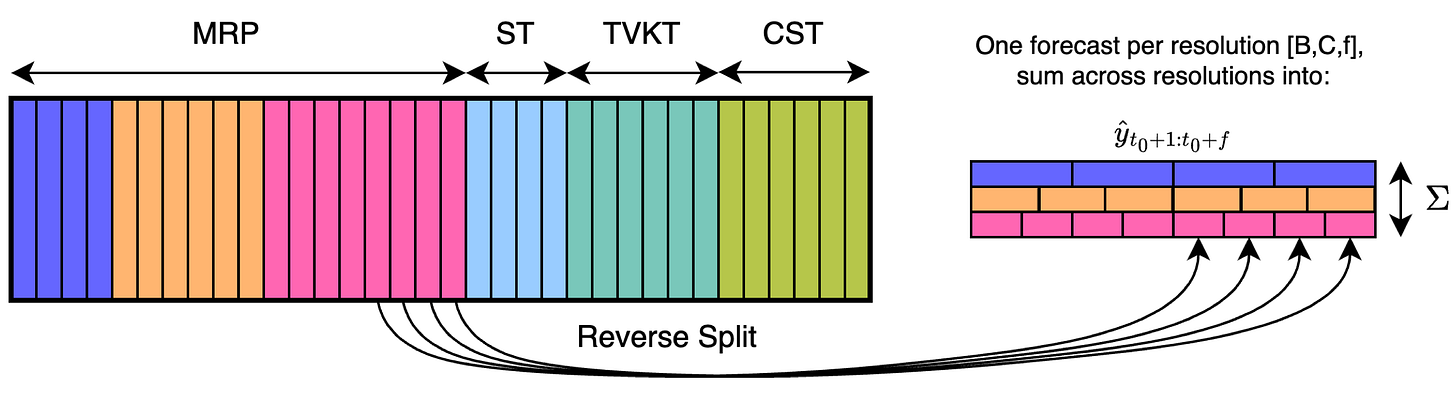

Tesco - A transformer for tokenised TS forecasting in price optimisation. This paper introduces a transformer architecture for time series forecasting applied to the real-world problem of price optimisation at a large retailer. It introduces several innovations, including differentiated time series patching and a multiple-resolution module for time-varying known variables. Based on experiments with real-world data, the model outperforms existing in-house models and other deep learning architectures. Paper: Multiple-Resolution Tokenization for Time Series Forecasting with an Application to Pricing.

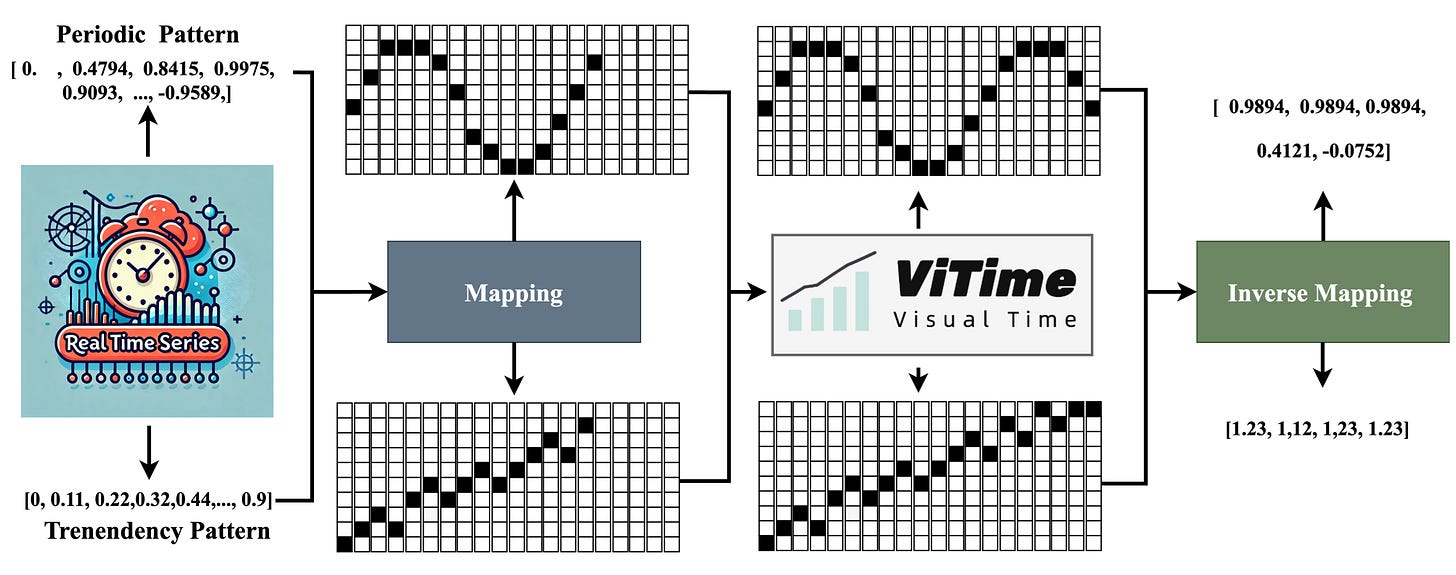
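
As a rough intuition for patching at multiple resolutions, the sketch below cuts one series into non-overlapping patches of several lengths, so that each patch could later be embedded as one token. This is a generic illustration, not the paper's architecture: the function names and the patch lengths are assumptions.

```python
def patch(series, patch_len):
    """Split a series into consecutive non-overlapping patches.

    If the length is not a multiple of patch_len, the oldest points are
    dropped so that the most recent observations are always kept.
    """
    offset = len(series) % patch_len
    return [series[i:i + patch_len] for i in range(offset, len(series), patch_len)]


def multi_resolution_patches(series, patch_lens=(2, 4, 8)):
    """Patch the same series at several resolutions (hypothetical helper)."""
    return {p: patch(series, p) for p in patch_lens}


series = list(range(9))  # 0..8, with 8 the most recent point
views = multi_resolution_patches(series, patch_lens=(2, 4, 8))
```

Short patches keep fine-grained detail while long patches summarise coarser trends, which is the usual motivation for feeding a model more than one resolution.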

A Vision-Language Model for SOTA zero-shot TS forecasting. This paper introduces ViTime, a novel VLM foundation model for TS forecasting. The model transforms numerical time series into binary images, converting numerical temporal correlations into binary pixel spatial correlations. ViTime overcomes the limitations of numerical time series data fitting by using visual data processing paradigms. ViTime achieved SOTA zero-shot performance, even surpassing the best individually trained supervised models in some situations. Paper & repo: ViTime: A Visual Intelligence-Based Foundation Model for Time Series Forecasting.

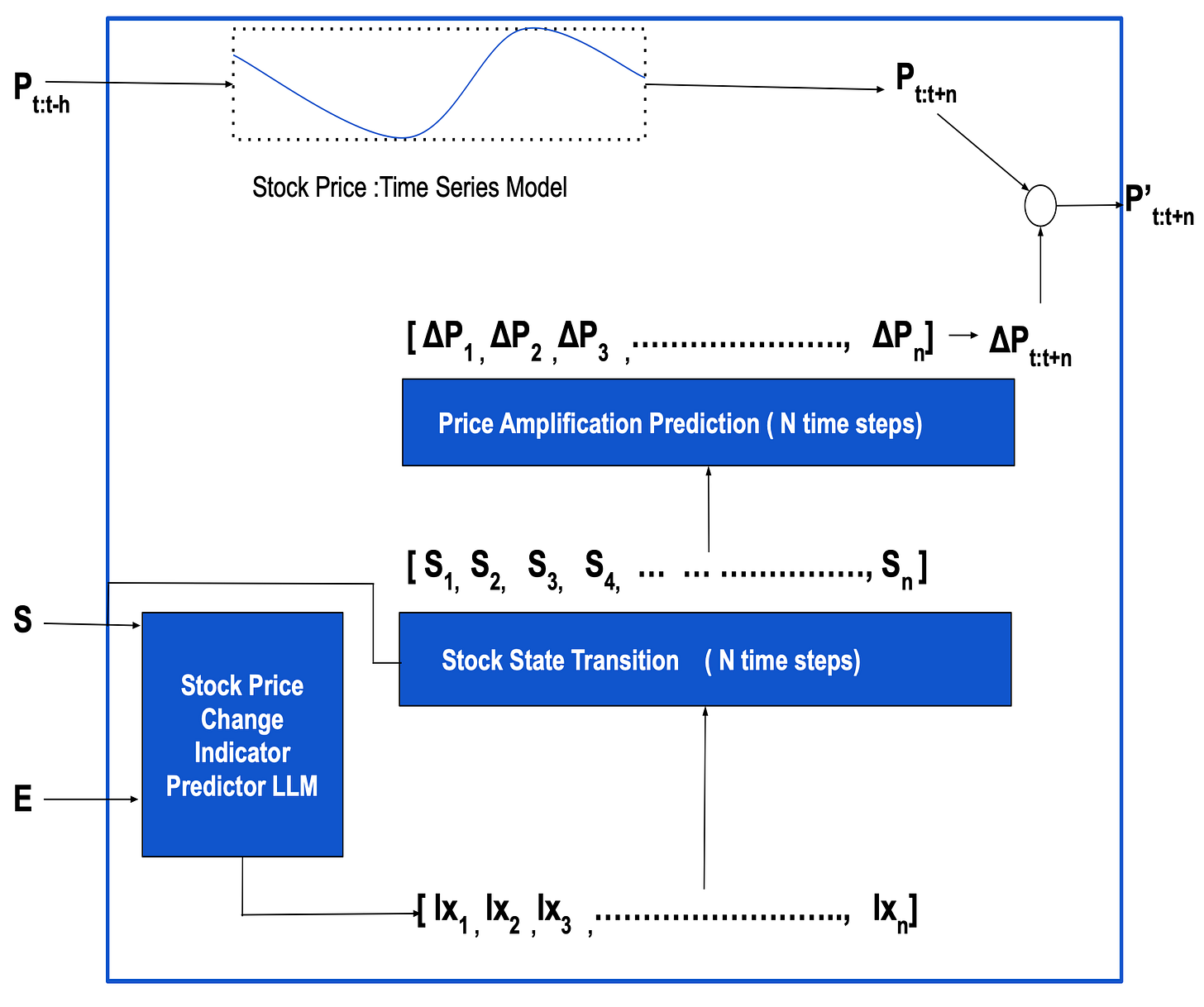
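
To picture what "time series as a binary image" can mean, here is a minimal sketch that rasterises a series into a grid with exactly one lit pixel per time step. It is only an assumption-laden illustration, not ViTime's actual mapping: the function name, the min-max scaling, and the image height are all made up for the example.

```python
def series_to_binary_image(values, height=8):
    """Rasterise a series into a height x len(values) grid of 0/1 pixels."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat series
    image = [[0] * len(values) for _ in range(height)]
    for col, v in enumerate(values):
        # Scale the value to a level in [0, height - 1].
        level = round((v - lo) / span * (height - 1))
        # Row 0 is the top of the image, so larger values go to smaller row indices.
        image[height - 1 - level][col] = 1
    return image


image = series_to_binary_image([0.0, 1.0, 2.0, 3.0], height=4)
for row in image:
    print("".join("#" if px else "." for px in row))
```

The image height sets the quantisation: a taller image preserves more of the numeric resolution but makes the input larger.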

T5 (Text-To-Text-Transfer-Transformer) for short-term stock price prediction. This paper introduces TimeS, a novel method for short-term excitement prediction in stock price time series, that uses the T5 transformer. T5 uses stock, events, and sentiment data as inputs to forecast price change indicators for the subsequent n time intervals, which are then employed to determine stock states. Then the time series are updated using price amplification values derived from these stock states. Paper: Text2TimeSeries: Enhancing Financial Forecasting through Time Series Prediction Updates with Event-Driven Insights from LLMs.

Have a nice week.

10 Link-o-Troned

the ML Pythonista

Deep & Other Learning Bits

AI/DL ResearchDocs

MLOps Untangled

ML Datasets & Stuff

Emilia: An Extensive, Multilingual Dataset for Large-Scale Speech Generation

SkunkworksAI: A Synth Dataset of Reasoning Chains Across a Variety of Tasks

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @alg0agent in the middle of the night.

I love your newsletter, I've been subscribed for 2 years or more now I think. There are many newsletters, especially after the gen AI hype, but I always found yours to be the best (no spam, no hype, a lot of useful resources/links, nice tone, etc.).

So 1. thank you very much for all your past work and dedication

2. what happened to you lately? You stopped? :(((

Much love.