Data Machina #204

AI Reasoning & CoT. Graph of Thought. LLMs in Production. Large Disagreement NLP. Fraud and GNNs. Transformers.js. SuperAGI. Macaw Multimodal LLM. Brainformers. Bayesian NNs.

On AI Reasoning: Beyond Chain of Thought (CoT). It’s been just 16 months since Google Brain published the Chain-of-Thought paper. I reckon a lot of people are madly brute-forcing the LLM beast to do “some reasoning” by chaining clever prompts as reasoning steps. Yet LLMs still hallucinate often, and their reasoning can be erratic. How can we improve CoT and reasoning in LLMs? Let’s see:

CoT and reasoning performance. I think it’s useful to understand where LLMs stand in terms of reasoning performance. See: CoT Hub: A benchmark for measuring LLMs' reasoning performance. The benchmark ranks LLMs on complex reasoning tasks such as math, science, and coding.

Process supervision and CoT. OpenAI just published Let's Verify Step by Step. This is a clever trick: train a reward model that gives feedback on each step of the chain of thought, rather than only on the final answer, to mitigate hallucinations and improve reasoning. In their experiments, process supervision beats outcome supervision. Blog: Improving mathematical reasoning with process supervision.
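To make the difference between the two kinds of supervision concrete, here is a minimal sketch. It is not OpenAI's implementation: `toy_step_score` is a hypothetical stand-in for a trained process reward model (it just checks simple arithmetic), and scoring a whole solution as the product of its step scores is one plausible aggregation, assumed here for illustration.

```python
import re
from math import prod
from typing import List

# Matches steps of the form "a op b = c" with integer operands.
STEP_PATTERN = re.compile(r"^\s*(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")

def toy_step_score(step: str) -> float:
    """Hypothetical stand-in for a trained process reward model.

    Returns a pseudo-probability that a single reasoning step is correct.
    """
    m = STEP_PATTERN.match(step)
    if m is None:
        return 0.5  # step cannot be checked: stay neutral
    a, op, b, claimed = int(m[1]), m[2], int(m[3]), int(m[4])
    actual = {"+": a + b, "-": a - b, "*": a * b}[op]
    return 0.95 if actual == claimed else 0.05

def process_score(steps: List[str]) -> float:
    """Process supervision: every step contributes to the score."""
    return prod(toy_step_score(s) for s in steps)

def outcome_score(steps: List[str], expected_answer: int) -> float:
    """Outcome supervision: only the final answer is checked."""
    final = steps[-1].split("=")[-1].strip()
    return 1.0 if final == str(expected_answer) else 0.0

def best_of_n(candidates: List[List[str]]) -> List[str]:
    """Pick the candidate chain with the highest process score."""
    return max(candidates, key=process_score)

# Two chains for "3 * 4 + 5": both end on 17, but one gets there by luck.
sound = ["3 * 4 = 12", "12 + 5 = 17"]
flawed = ["3 * 4 = 13", "13 + 4 = 17"]
```

Both chains get the same outcome score, because both end on 17. Only the step-level score separates the sound chain from the one that reached the right answer through a wrong intermediate step.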

Instruction finetuning and CoT. How can we instil the same capability for step-by-step CoT reasoning on unseen tasks in small LMs? In The Chain of Thought Collection, the researchers show that instruction tuning with CoT improves zero-shot and few-shot learning capabilities.

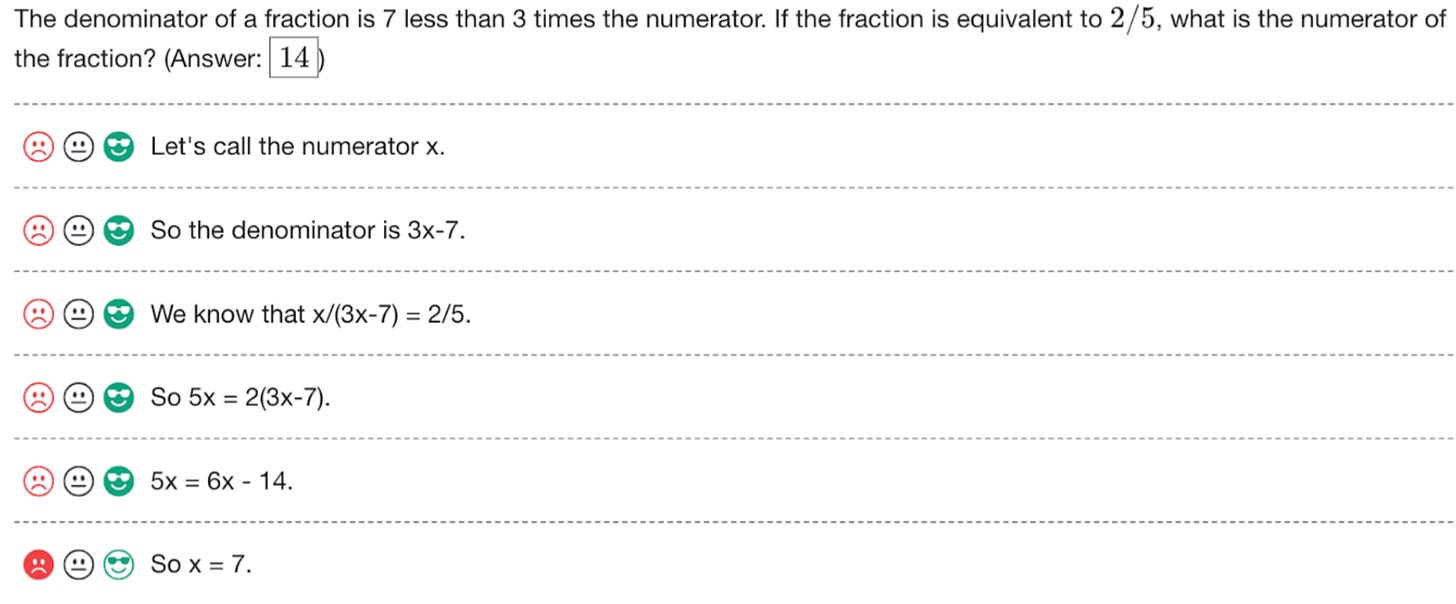

Reversing CoT. A novel method to improve LLMs' reasoning abilities by automatically detecting and rectifying factual inconsistencies in LLM-generated solutions. Paper: RCoT (Reversing Chain-of-Thought)

Tabular CoT. A new CoT prompting method that models the reasoning process in a highly structured, tabular format. Despite its simplicity, Tab-CoT: Tabular Chain of Thought has strong zero-shot and few-shot capabilities across many tasks.
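As a rough illustration of what tabular prompting can look like in practice, the sketch below builds a prompt that ends with a table header and parses a table-shaped completion back into rows. The column names and both helper functions are assumptions made for this example, not an API from the paper's code, and the completion string is hand-written rather than real model output.

```python
from typing import Dict, List

# Assumed column scheme for the reasoning table (illustrative only).
COLUMNS = ["step", "subquestion", "process", "result"]

def build_tabular_prompt(question: str) -> str:
    """Append a table header so the model continues by filling in rows."""
    header = "|" + "|".join(COLUMNS) + "|"
    return f"{question}\n{header}\n"

def parse_table(completion: str) -> List[Dict[str, str]]:
    """Parse pipe-delimited rows from a completion into dictionaries."""
    rows = []
    for line in completion.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # not a table row
        cells = [c.strip() for c in line.strip("|").split("|")]
        if len(cells) != len(COLUMNS):
            continue  # malformed row
        if cells == COLUMNS or all(set(c) <= set("-: ") for c in cells):
            continue  # repeated header or separator row
        rows.append(dict(zip(COLUMNS, cells)))
    return rows

# Hand-written example completion (not real model output).
completion = (
    "|1|How many apples at the start?|given|5|\n"
    "|2|How many after buying 3 more?|5 + 3|8|\n"
)
rows = parse_table(completion)
final_answer = rows[-1]["result"]
```

Reading the answer from the last row's result column is a design choice for this sketch. A real pipeline would likely add a separate answer-extraction prompt.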

Tree of Thoughts (ToT). ToT, from Princeton and Google DeepMind, allows LLMs to perform deliberate decision making by considering multiple reasoning paths. ToT self-evaluates choices to decide the next course of action, and looks ahead or backtracks when necessary to make global choices. ToT enhances CoT. Paper, code: Tree of Thoughts: Deliberate Problem Solving with LLMs.

Also worth checking out this ToT implementation: Plug and Play Implementation of Tree of Thoughts
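For intuition on the Tree of Thoughts idea, here is a toy sketch of a breadth-first search over "thoughts" with an evaluator that prunes unpromising branches. It is not taken from either repo above: `propose` and `evaluate` are hypothetical stand-ins for the LLM calls that would generate and self-evaluate thoughts, and the puzzle (reach a target number using "+3" and "*2") is invented for illustration.

```python
from typing import List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, thoughts taken so far)

OPS = {"+3": lambda v: v + 3, "*2": lambda v: v * 2}

def propose(state: State) -> List[State]:
    """Stand-in for an LLM proposing candidate next thoughts."""
    value, path = state
    return [(fn(value), path + (name,)) for name, fn in OPS.items()]

def evaluate(state: State, target: int) -> float:
    """Stand-in for LLM self-evaluation: higher means more promising."""
    value, _ = state
    if value > target:
        # For positive values both operations only increase the number,
        # so overshooting is a dead end.
        return float("-inf")
    return -abs(target - value)

def tree_search(start: int, target: int, beam_width: int = 2,
                max_depth: int = 6) -> Optional[Tuple[str, ...]]:
    """Breadth-first search that keeps the best `beam_width` states per level."""
    frontier: List[State] = [(start, ())]
    for _ in range(max_depth):
        candidates = [c for s in frontier for c in propose(s)]
        for value, path in candidates:
            if value == target:
                return path
        scored = [(evaluate(c, target), c) for c in candidates]
        viable = [(score, c) for score, c in scored if score != float("-inf")]
        viable.sort(key=lambda pair: pair[0], reverse=True)
        frontier = [c for _, c in viable[:beam_width]]
        if not frontier:
            return None  # every branch was pruned
    return None

path = tree_search(start=2, target=14)
```

Keeping several partial paths alive at each level is what lets the search drop a branch that looked good early and continue from a sibling instead. A depth-first variant with explicit backtracking would be another way to sketch the same idea.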

Multi-agent debate improves reasoning. MIT CSAIL’s new approach has multiple LLMs debate their reasoning processes to arrive at a common final answer. This approach significantly enhances math and strategic reasoning across a number of tasks. It also improves the factual validity of generated content, reducing fallacious answers and hallucinations. Paper, code: Improving Factuality and Reasoning in Language Models through Multiagent Debate
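The debate loop itself is simple to sketch. In the toy version below, each "agent" is a plain Python callable standing in for an LLM call. The `Agent` interface, the `make_toy_agent` helper, and the final majority vote are all assumptions for illustration, not the paper's code.

```python
from collections import Counter
from typing import Callable, List

# An agent takes the question plus its peers' latest answers and replies.
Agent = Callable[[str, List[str]], str]

def make_toy_agent(initial: str, stubborn: bool = False) -> Agent:
    """Hypothetical stand-in for an LLM: may be persuaded by its peers."""
    def agent(question: str, peer_answers: List[str]) -> str:
        if stubborn or not peer_answers:
            return initial
        top, count = Counter(peer_answers).most_common(1)[0]
        # Switch only if a strict majority of peers agree on one answer.
        return top if count > len(peer_answers) / 2 else initial
    return agent

def debate(question: str, agents: List[Agent], rounds: int = 2) -> str:
    """Run several debate rounds, then return the most common answer."""
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # All agents update simultaneously from the previous round's answers.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    return Counter(answers).most_common(1)[0][0]

agents = [make_toy_agent("17"), make_toy_agent("17"), make_toy_agent("19")]
final = debate("What is 3 * 4 + 5?", agents)
```

With real models, each agent call would be a prompt that includes the other agents' full responses and asks for an updated answer.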

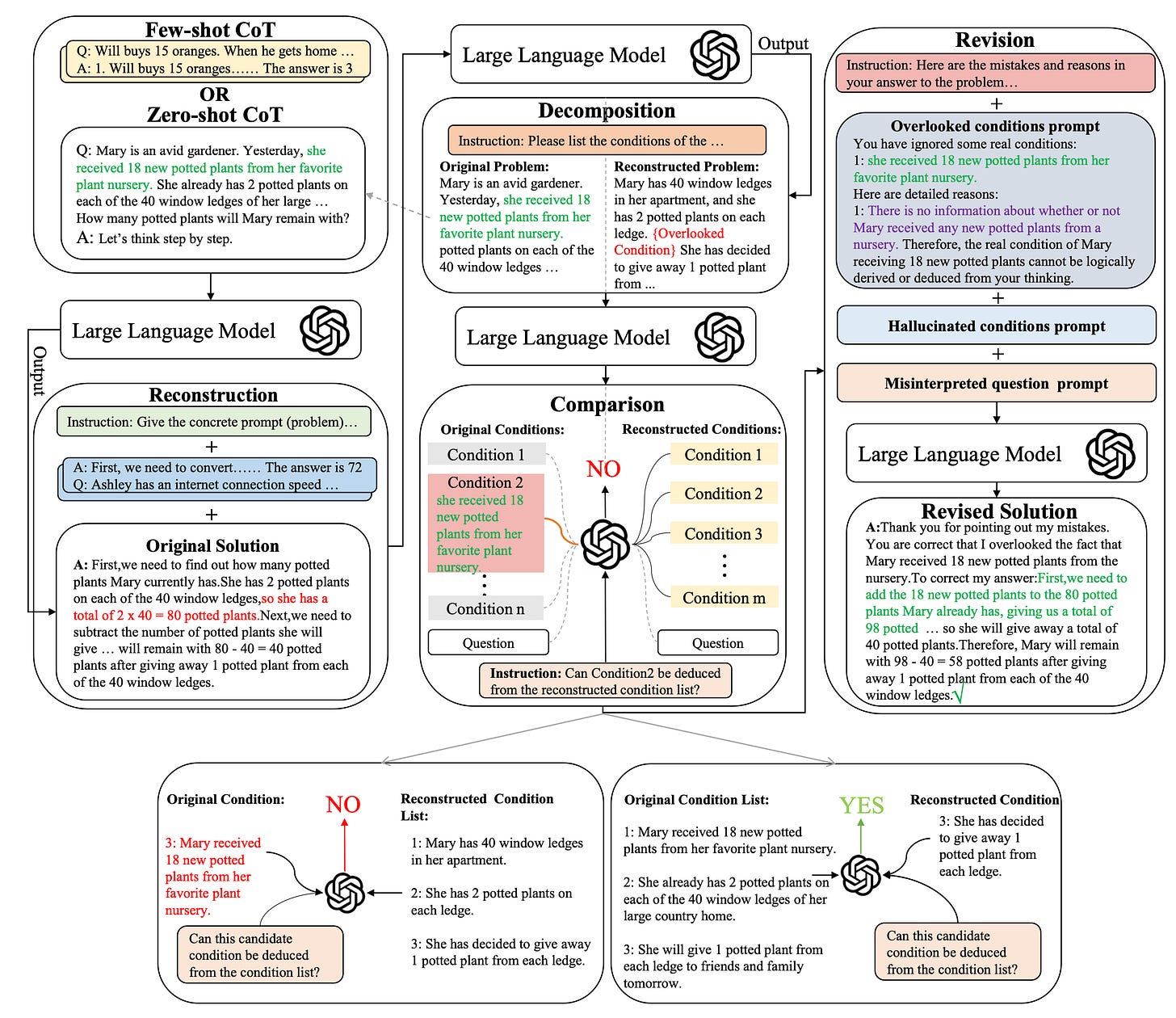

Compositional reasoning. MS Research Chameleon is a new compositional reasoning framework that integrates rule-based modules with LLMs. MSR claims that Chameleon outperforms other LLMs in reasoning. Check out the paper, repo: Chameleon: Plug-and-Play Compositional Reasoning with LLMs

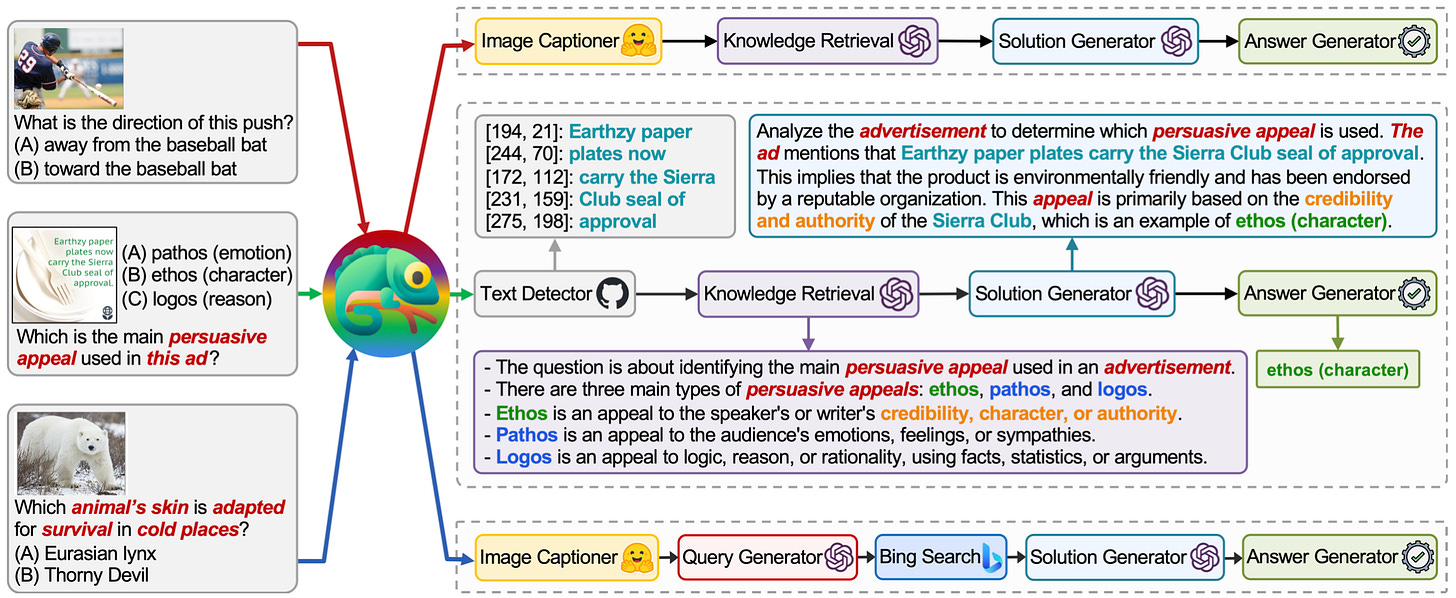

Beyond CoT: Graph-of-Thought. I like this. Graph-of-Thought (GoT) models human thought processes as a graph. By representing thought as a graph, GoT captures the non-sequential nature of human thinking and allows for a more realistic modelling of thought processes. Paper: Beyond Chain-of-Thought, Effective Graph-of-Thought Reasoning in LLMs.

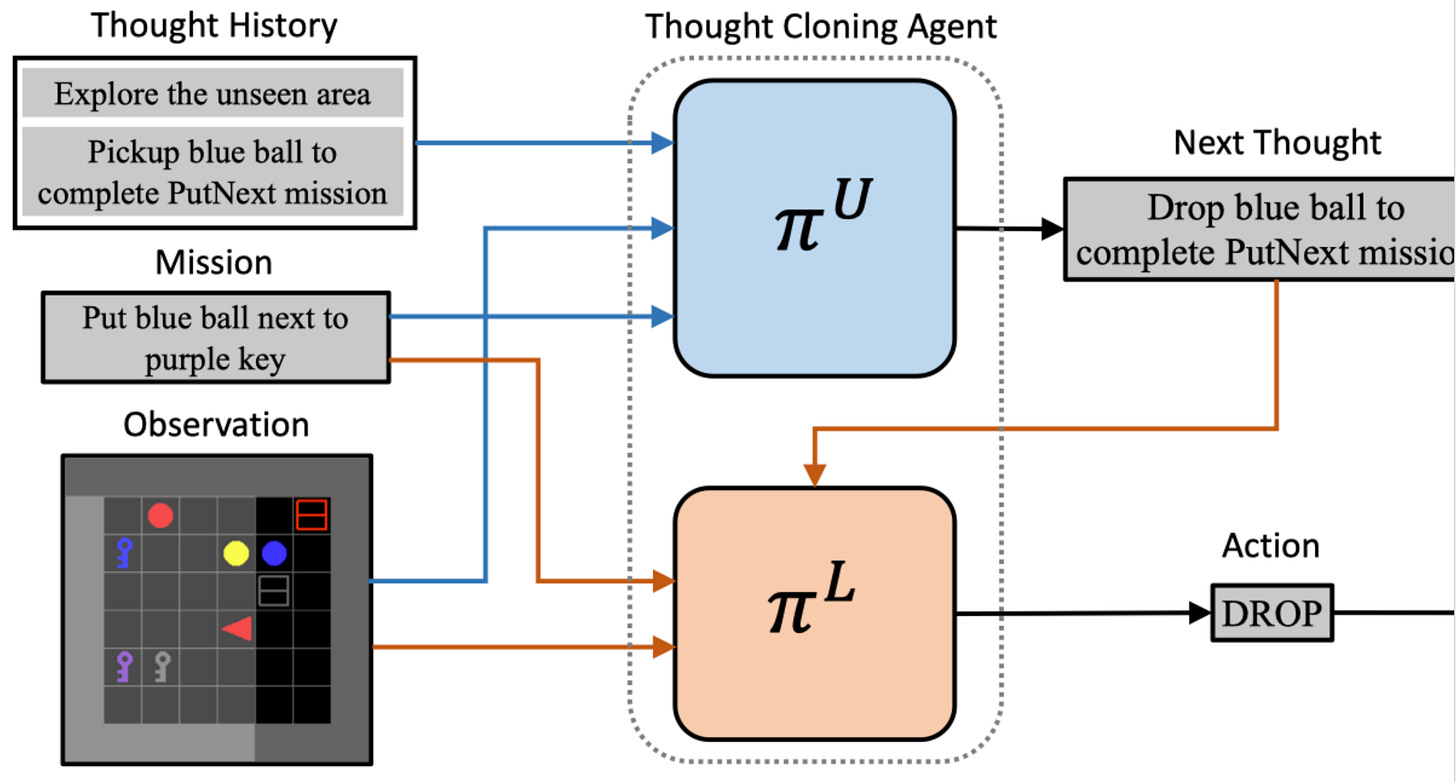

Thought Cloning. Forget CoT. Thought Cloning (TC) is a novel imitation learning framework that enhances an AI agent’s capability to think like humans. Repo, paper: Thought Cloning: Learning to Think while Acting by Imitating Human Thinking.

Have a nice week.

10 Link-o-Troned

the ML Pythonista

ecoute - Real-time, Full-duplex Transcription Powered by Whisper

Macaw: Multi-Modal LM with Image, Video, Audio, & Text Integration

the ML codeR

Deep & Other Learning Bits

AI/ DL ResearchDocs

data v-i-s-i-o-n-s

MLOps Untangled

AI startups -> radar

ML Datasets & Stuff

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.