
Data Machina #237

New SOTA AI Code Generation. AlphaCodium. Graph ML 2024. The Modern AI Stack. QAnything AI. Apple AIM. NVIDIA ChatQA. MetaAI Self-Rewarding Models. Dataland. Preference Tuning: DPO, IPO & KTO.

AI Code Generation: A New Paradigm. Several developer surveys indicate that devs -especially Sr. devs- who use AI tools are “more productive.” Today you can use lots of AI tools for code completion, pair programming, data generation, and even have a team of AI coding agents complete tedious tasks for you.

Back in October, the team at DeepSense wrote an excellent blogpost on the state of the art in AI coding agents, detailing the pros & cons and the workflows of each AI coding agent.

Worth mentioning that -fundamentally- all these AI coding agents are mostly based on prompt engineering, chaining prompts and CoT techniques, and hacking prompts for AI code generation.

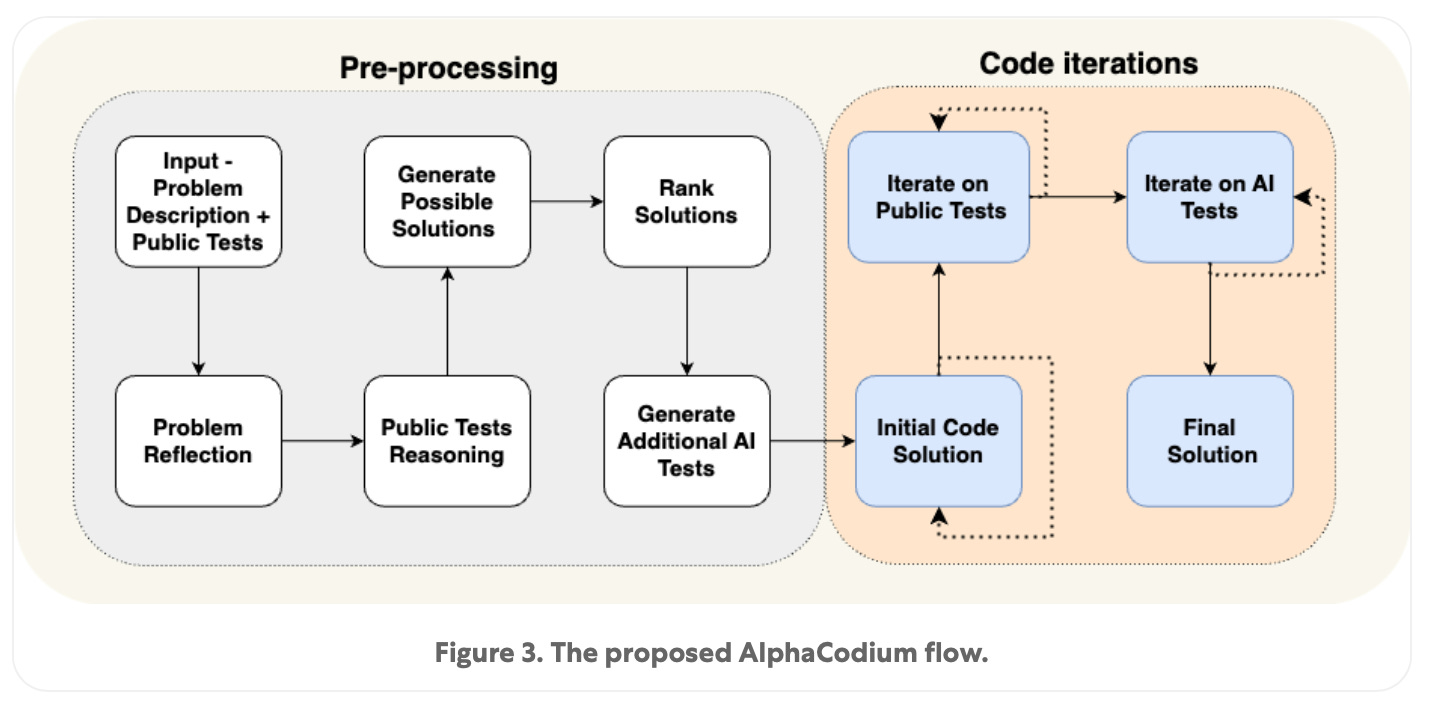

New SOTA AI code generation paradigm. But five days ago, the team at Codium introduced AlphaCodium: a new, SOTA AI code generation approach that implements a test-based, multi-stage, code-oriented iterative flow.

AlphaCodium was tested on the DeepMind CodeContests dataset, which has 13K+ competitive coding problems. Some key points about AlphaCodium:

It moves away from the basic prompt-and-answer paradigm (prompt engineering, hacking prompts) to a coding "flow" paradigm, where the AI agent iteratively builds and tests the answer (the code solution) using self-reflection and other techniques

It beats DeepMind AlphaCode2 -SOTA until now- which uses a brute-force approach: generating a search space of around a million AI-generated coding solutions and then clustering them down to a few candidates

It beats CodeChain, another near-SOTA approach which uses a chain of sub-module-based self-revisions

It doesn’t need intensive model fine-tuning and massive computation like other AI code generation approaches
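To make the "flow" idea above concrete, here is a minimal sketch of a test-driven generate-and-repair loop. This is not AlphaCodium's implementation, and the real flow has more stages (see the paper). The names `run_tests`, `iterative_flow` and `stub_generate` are hypothetical, and `stub_generate` is a hard-coded stand-in for an LLM call so the example runs offline.

```python
from typing import Callable, List, Optional, Tuple

Test = Tuple[tuple, object]  # (positional args, expected result)


def run_tests(source: str, func_name: str, tests: List[Test]) -> List[str]:
    """Load candidate code and return a list of failure messages (empty = all pass)."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # only safe here because the stub code is trusted
        func = namespace[func_name]
    except Exception as exc:
        return [f"code did not load: {exc!r}"]
    failures = []
    for args, expected in tests:
        try:
            got = func(*args)
        except Exception as exc:
            failures.append(f"{func_name}{args} raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"{func_name}{args} returned {got!r}, expected {expected!r}")
    return failures


def iterative_flow(
    generate: Callable[[List[str]], str],
    func_name: str,
    tests: List[Test],
    max_rounds: int = 3,
) -> Optional[str]:
    """Generate code, test it, and feed the failures back until the tests pass."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(feedback)
        feedback = run_tests(candidate, func_name, tests)
        if not feedback:
            return candidate
    return None  # no passing candidate within the round budget


def stub_generate(feedback: List[str]) -> str:
    """Hypothetical stand-in for an LLM: buggy first draft, fixed after feedback."""
    if not feedback:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


solution = iterative_flow(stub_generate, "add", [((1, 2), 3), ((0, 0), 0)])
print(solution)
```

In a real setup, `generate` would prompt a model with the problem statement plus the failure messages, and the candidate code would run in a sandbox rather than via a bare `exec`.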

You can read all the details on AlphaCodium in these links:

Paper: Code Generation with AlphaCodium: From Prompt Engineering to Flow Engineering

Repo: Official implementation of the "Code Generation with AlphaCodium" paper

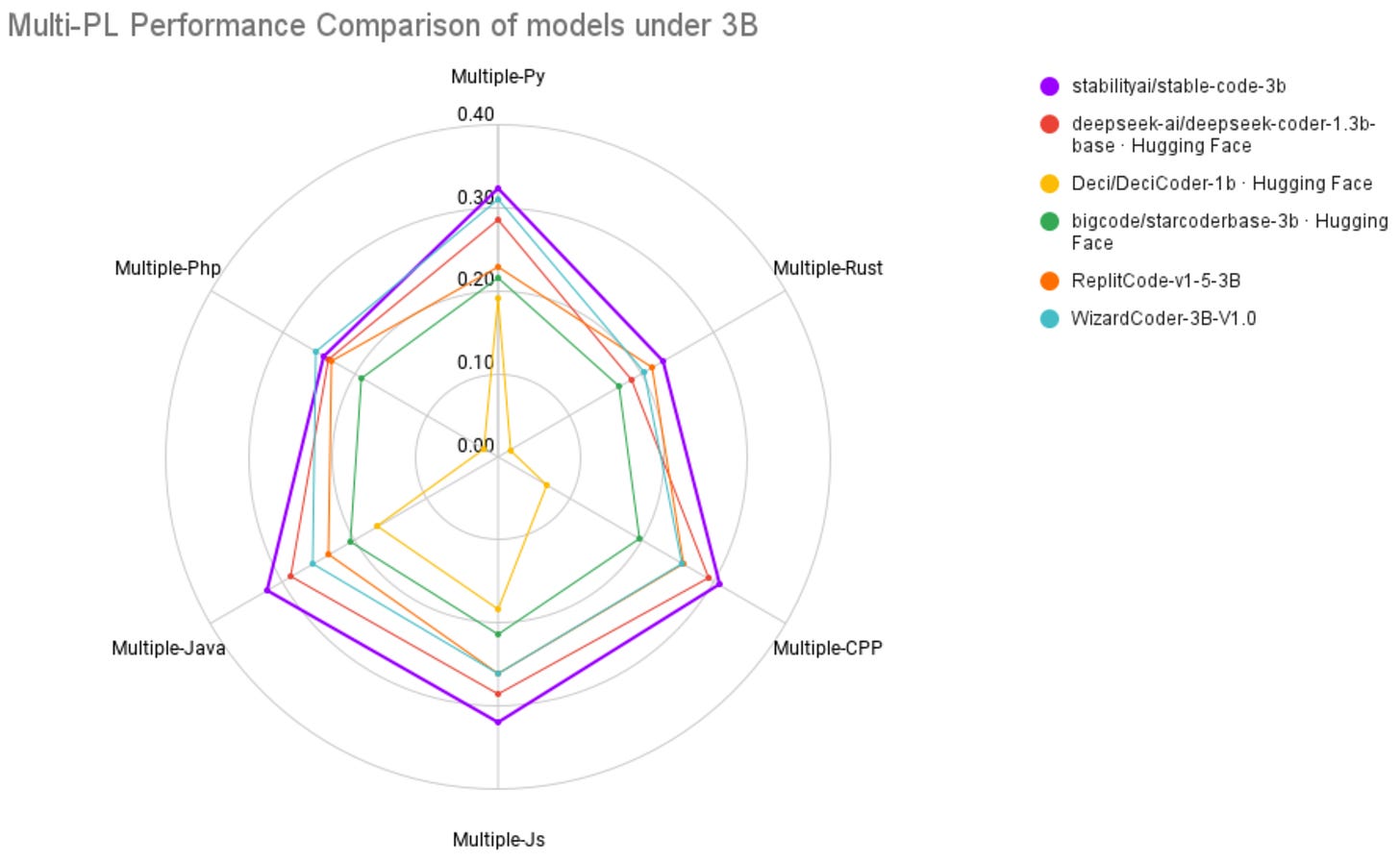

stable-code-3b: A new smallish, SOTA AI code completion model. Stability AI just released stable-code-3b, a 2.7B-parameter decoder-only language model pre-trained on 1.3 trillion tokens of diverse textual and code datasets. stable-code-3b is trained on 18 programming languages and demonstrates state-of-the-art performance on AI code completion (compared to models of similar size). Blogpost - Stable Code 3B: Coding on the Edge

Programming -not prompting- Foundation Models. Prompt engineering is a hack for NLP tasks that was not originally designed for coding. Stanford DSPy is a framework for developing high-quality LM systems. DSPy minimises many of the issues of prompt engineering, hacking prompts, or stuffing everything together into one single clever prompt. DSPy separates the flow of your program (modules) from the parameters (prompt instructions, few-shot examples, and LM weights), which DSPy optimisers can tune if you give them an objective. In this interview, Omar -the creator of DSPy and ColBERT- discusses the benefits of DSPy's programmatic approach. Highly recommended, it will change the way you think about developing LLM apps.
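The separation of program flow from tunable parameters can be illustrated with a toy example in plain Python. To be clear, this is not DSPy's API: `PromptParams`, `program`, `optimise` and `stub_lm` are hypothetical names, the "LM" is a deterministic stub, and the "optimiser" is just a pick-the-best search over candidate instructions given a metric. Real DSPy optimisers are far more capable.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class PromptParams:
    """The tunable part: instruction wording and few-shot demos."""
    instruction: str
    demos: List[Tuple[str, str]] = field(default_factory=list)


def build_prompt(params: PromptParams, question: str) -> str:
    lines = [params.instruction]
    for q, a in params.demos:
        lines.append(f"Q: {q}\nA: {a}")
    lines.append(f"Q: {question}\nA:")
    return "\n".join(lines)


def program(lm: Callable[[str], str], params: PromptParams, question: str) -> str:
    """The fixed part: the flow stays the same whatever the parameters say."""
    return lm(build_prompt(params, question)).strip()


def optimise(
    lm: Callable[[str], str],
    candidates: List[PromptParams],
    devset: List[Tuple[str, str]],
    metric: Callable[[str, str], bool],
) -> PromptParams:
    """Toy optimiser: keep the candidate parameters that score best on the dev set."""
    def score(params: PromptParams) -> int:
        return sum(metric(program(lm, params, q), gold) for q, gold in devset)
    return max(candidates, key=score)


def stub_lm(prompt: str) -> str:
    """Hypothetical stand-in for an LM: uppercases the last question if told to."""
    question = prompt.rsplit("Q: ", 1)[1].split("\nA:")[0]
    return question.upper() if "uppercase" in prompt.lower() else question


candidates = [PromptParams("Answer briefly."), PromptParams("Reply in uppercase.")]
devset = [("hello", "HELLO"), ("dspy", "DSPY")]
best = optimise(stub_lm, candidates, devset, metric=lambda pred, gold: pred == gold)
print(best.instruction, "->", program(stub_lm, best, "flow"))
```

The point of the design: `program` never changes while the optimiser swaps parameters in and out, so you state the objective (the metric) instead of hand-tweaking a prompt.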

Have a nice week.

10 Link-o-Troned

Artificial Analysis - Compare AI Models and Hosting Providers

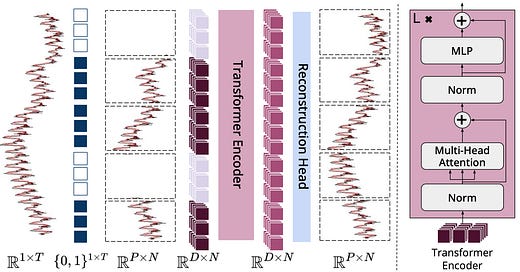

Uber uVitals - Early Anomaly Detection on Multi-Dim Time Series

[free book] The Foundations of Vector Retrieval (pdf, 185 pages)

the ML Pythonista

QAnything AI - Fast, Accurate, Local Q&A on Any Doc/File Format

How to Create Your Own Team of Autonomous Agents with CrewAI

Deep & Other Learning Bits

[new] 2.5x Faster Inference in Mixtral, Phi-2, & Falcon with DeepSpeed

An Overview of RAG with Unstructured Data & Knowledge Graphs

AI/DL ResearchDocs

Apple AIM: New Frontier Autoregressive Image Models (paper, repo)

NVIDIA ChatQA: A New Conversational QA Model that Beats GPT-4

MetaAI: Self-Rewarding Models with Iterative DPO Beat GPT-4…

data v-i-s-i-o-n-s

MLOps Untangled

AI startups -> radar

ML Datasets & Stuff

The Effect of Intrinsic Dataset Properties on Generalisation (ICLR 2024)

WebSight - 823K HTML/CSS Codes for AI Website Generation from Screenshots

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.
