Discover more from Data Machina

Data Machina #259

Prompt Engineering 2.0. Automated Prompt Optimisation. AI Scaling Myths. AGI World Models. What's an AI Agent? GenAI at LinkedIn. Failed AI Projects. Img2Txt2Txt Models. RAGFlow. JEPA Deep Dive.

Prompt Engineering 2.0. Prompt engineering is not going anywhere any time soon. The AI Goliaths have invested 10’s of billions in LLMs & Large Multimodal Models (LMMs), which today -for better or for worse- dominate “modern AI” totally. Due to the way these models were designed and developed, inevitably, to get solid output results from these models you need to instruct them with natural language prompts.

Prompt engineering is like Marmite. Many of my hardcore s/w engineers friends absolutely hate prompt engineering. They dismiss it as “random, unreliable pseudo-programming using English language.” But some love it like Marmite. Regardless, if you are an AI engineer, researcher, dev or business analyst, and you want to stay “relevant in modern AI,” you just can’t ignore LLM/LMM-based R&D. And that involves prompt engineering. So this is my advice to my sceptic friends:

Understand the fundamentals and mindset of prompt engineering. A great post by Eugene. His main point: prompt engineering is about conditioning the probabilistic model to generate the desired outputs; and that requires to follow a systematic, thorough, structured approach. Blogpost: Prompting Fundamentals and How to Apply them Effectively.

Sign up for an advanced prompt engineering course, be picky. There are lots of prompting courses out there. But there aren’t many comprehensive, hands-on, courses on advanced prompt engineering. This is a good one: The Complete Prompt Engineering for AI Bootcamp (June 2024).

And Elvis, the brains behind the great Prompt Engineering Guide (although not fully up to date), recently released an advanced prompt engineering course. Also checkout the latest Anthropic's Prompt Engineering Interactive Tutorial, which is free and great.

Learn the latest prompting techniques. There are ca. 60 or so well known prompting techniques and patterns. New techniques appear ever week! Written by researchers from OpenAI, Microsoft, Stanford et al., the Prompt Report (June 2024) is one of the most comprehensive surveys on prompting techniques. If you don’t have time to read the 75 pages report, Daniel recently wrote a nice blog with his summary and notes on all prompting techniques described in the Prompt Report.

Use specialised tools to orchestrate prompting pipelines. Prompt engineering is a deeply iterative process. This is a good post on a s/w developer reflecting on the prompting iterations and sharing 10 tips just to build a basic LLM-based app. Orchestrating a prompt engineering pipeline from experimentation to production prompts is hard, and requires specialised tools… Questions: How do you test & evaluate prompts? Where do you store your test & prod prompts? How do you do prompting version control? There are lots of prompting tools, but here are a few interesting ones that come to mind:

Prompt Engineering Tool. A few days ago, Tecknium -the brains behind the amazing Nous-Hermes-2-Mixtral-8x7B model- just released a tool that enables you to: 1) test prompts across multiple LLM simultaneously 2) save and load prompt templates, 3) manage variables for dynamic prompt generation, and 3) compare prompt outputs from different models side-by-side

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases

Promtptools is a set of free, open-source tools for testing and experimenting with prompts

Promptmetheus is a prompt engineering IDE that enables you to compose prompts without code, test prompts with different models, an optimise prompts for reliability and cost

OpenPrompt is a library built upon PyTorch that provides a standard, flexible and extensible framework to deploy the prompt-learning pipeline.

promptfoo a tool for building reliable prompts, evaluating them with caching, concurrency, and live reloading, and scoring outputs automatically

Learn the latest jailbreak prompting techniques. The idea here is that you learn how to protect your LLM-based app against adversarial jailbreak attacks by learning how these jailbreaks are crafted with sophisticated English prompts. The venerable Pliny the Prompter @elder_plinius maintains a repo with proven, working jailbreak prompts for ALL major foundation models from OpenAI, Cohere, Google, Microsoft and Anthropic, including “God Mode” system prompts.

Learn prompting techniques that decrease costs and improve performance. You need to find a way to get accurate prompts without impacting cost, speed, and performance. Jan wrote about 5 techniques to streamline prompts, while decreasing costs without sacrificing accuracy. Blogpost: Streamline Your Prompts to Decrease LLM Costs and Latency. And Justin, recently posted about how to use Prompt Decomposition to avoid high costs, low latency and low accuracy.

Get into Automated Prompt Optimisation (APO) soon. Once you realise that developing reliable, production LLM-based apps requires solid prompt engineering skills, tools and methods, it’s time to get into automating & optimising prompts. There is a lot of emerging research on this, and several new tools are appearing in the landscape:

The Automatic Prompt Engineer (APE) and DeepMind ORPO were one of the first papers on methods for automatic prompt generation, selection and optimisation

AutoPrompt is a prompt optimisation framework designed to enhance and perfect your prompts for real-world use cases. It automatically generates high-quality, detailed prompts tailored to user intentions, and employs refinement (calibration) process

Automating Prompt Engineering with DSPy and Haystack. An interesting post on how to use DSPy to automate prompt engineering. DSPy separates the flow of your program from the prompts and weights at each step, while it runs optimisers, that tune the prompts and/or the weights of your LM calls

Cracking the Code: Automated Prompt Optimisation. The team at Martian, surveyed several companies using advanced prompting techniques. They argue that Automated Prompt Optimisation (APO) is the way to resolve key issues like model variability, drift, and “secret prompt handshakes”. And they also share innovative techniques used to address these challenges, including LLM observers, prompt co-pilots, and human-in-the-loop feedback systems to refine prompts

Optimising Instructions and Demonstrations for Multi-Stage Language Model. A new paper from researchers at Berkeley, Stanford et al. introducing MIPRO, a new prompt optimisation method for LM programs. MIPRO -implemented in DSPy- outperforms baselines on five of six diverse LM programs using a best-in-class open-source model like Llama-3-8B.

Have a nice week.

10 Link-o-Troned

the ML Pythonista

N-BEATS: 1st DL Model that Works for Time Series Forecasting

RAGFlow: An Open-source RAG Engine for Deep Doc Understanding

Deep & Other Learning Bits

Why You (Currently) Don’t Need DL for Time Series Forecasting

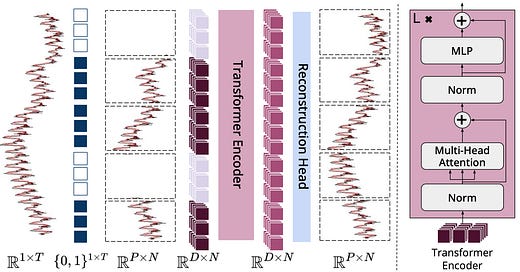

A Deep Dive into JEPA: Yann Lecun’s Alternative to Transformers

Intro to Matryoshka Representation Learning with OpenAI & Weviate

AI/ DL ResearchDocs

Apple 4M: An Any-to-Any Vision Model for Tens of Tasks & Modalities

Cambrian-1: A Family of Open Vision-Centric Multimodal LLMs (paper, repo)

MLOps Untangled

ML Datasets & Stuff

MINT-1T: A Open-source Multimodal Dataset with 1 Trillion Tokens

How to Think about Creating a Dataset for Finetuning Evaluation

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.

Subscribe to Data Machina

A weekly deep dive into the latest AI / ML research, projects & repos.